What, why?

This is a collection of various blog posts I've transcribed. The reason I am bothering is

- Its really common for blogs to go unmaintained and then poof i've lost that info.

- I can start to organize and search over stuff better this way. No more "oh, what was that random blog from 10 years ago that talked about this thing?" for me.

I haven't and won't transcribe each and every link in the posts, so some references might become dead, but I am going to do my best to make this useful on its own.

In progress

Links I still need to do

- https://web.archive.org/web/20200423065845/https://blog.reactioncommerce.com/how-to-implement-graphql-pagination-and-sorting/

- https://tomassetti.me/antlr-mega-tutorial/

- https://mattferraro.dev/posts/poissons-equation

- https://libertysys.com.au/2016/09/the-school-for-sysadmins-who-cant-timesync-good-and-wanna-learn-to-do-other-stuff-good-too-part-1-the-problem-with-ntp/

- https://blog.crunchydata.com/blog/better-json-in-postgres-with-postgresql-14

- https://javahippie.net/clojure/apm/2021/05/29/tracing-with-alter-var-root.html

- https://www.toptal.com/java/how-hibernate-ruined-my-career

- https://www.sorcerers-tower.net/articles/configuring-jetty-for-https-with-letsencrypt

- https://quarkus.io/version/1.7/guides/optaplanner

- https://reactnative.dev/docs/testing-overview

- https://antonz.org/python-packaging/

- https://jessewarden.com/2018/06/functional-programming-unit-testing-in-node-part-1.html

- https://malloc.se/blog/zgc-jdk16

- https://gigasquidsoftware.com/blog/2021/03/15/breakfast-with-zero-shot-nlp/

- https://beepb00p.xyz/sad-infra.html#why_search

- https://www.infoworld.com/article/2074186/j2ee-or-j2se--jndi-works-with-both.html

- https://trenki2.github.io/blog/2017/06/02/using-sdl2-with-cmake/

- https://blog.discord.com/why-discord-is-switching-from-go-to-rust-a190bbca2b1f

- https://suade.org/dev/12-requests-per-second-with-python/

- https://rustyyato.github.io/type/system,type/families/2021/02/15/Type-Families-1.html

- https://tanelpoder.com/posts/reasons-why-select-star-is-bad-for-sql-performance/

- https://www.compose.com/articles/postgresql-tips-documenting-the-database/

- https://chriskiehl.com/article/thoughts-after-6-years

- https://www.marcolancini.it/offensive-infrastructure/

- https://dashbit.co/blog/you-may-not-need-redis-with-elixir

- https://www.imperva.com/learn/performance/cache-control/

- https://www.smashingmagazine.com/2016/09/the-thumb-zone-designing-for-mobile-users/

- https://codahale.com/how-to-safely-store-a-password/

- https://vmlens.com/articles/cp/4_atomic_updates/

- https://tomcam.github.io/postgres/

- https://wozniak.ca/blog/2014/08/03/1/

- https://blog.brunobonacci.com/2014/11/16/clojure-complete-guide-to-destructuring/

- https://www.teamten.com/lawrence/programming/use-singular-nouns-for-database-table-names.html

- http://highscalability.com/blog/2014/9/8/how-twitter-uses-redis-to-scale-105tb-ram-39mm-qps-10000-ins.html

- https://cran.r-project.org/web/packages/viridis/vignettes/intro-to-viridis.html

- https://acoup.blog/2020/09/18/collections-iron-how-did-they-make-it-part-i-mining/

- https://inside.java/2020/08/07/loom-performance/

- https://cssfordesigners.com/articles/things-i-wish-id-known-about-css

- https://alexwlchan.net/2018/01/downloading-sqs-queues/

- https://web.archive.org/web/20201031072102/https://akshayr.me/blog/articles/python-dictionaries

- https://abramov.io/rust-dropping-things-in-another-thread

- https://blog.kevmod.com/2020/05/python-performance-its-not-just-the-interpreter/

- https://medium.com/teamzerolabs/5-aws-services-you-should-avoid-f45111cc10cd

- https://www.phoenixframework.org/blog/build-a-real-time-twitter-clone-in-15-minutes-with-live-view-and-phoenix-1-5

- https://grahamc.com/blog/erase-your-darlings

- https://www.alibabacloud.com/blog/how-to-deploy-apps-effortlessly-with-packer-and-terraform_593894

- https://vlaaad.github.io/year-of-clojure-on-the-desktop

- https://fasterthanli.me/articles/i-want-off-mr-golangs-wild-ride

- https://www.forrestthewoods.com/blog/memory-bandwidth-napkin-math/

- https://m.signalvnoise.com/only-15-of-the-basecamp-operations-budget-is-spent-on-ruby/

- https://www.mattlayman.com/blog/2019/failed-saas-postmortem

- https://sujithjay.com/data-systems/dynamo-cassandra/

- http://gustavlundin.com/di-frameworks-are-dynamic-binding/

- https://evilmartians.com/chronicles/graphql-on-rails-1-from-zero-to-the-first-query

- https://medium.com/@cep21/after-using-both-i-regretted-switching-from-terraform-to-cloudformation-8a6b043ad97a

- https://jvns.ca/blog/2019/10/03/sql-queries-don-t-start-with-select/

- https://vas3k.com/blog/computational_photography/

- https://blog.pragmaticengineer.com/software-architecture-is-overrated/

- https://web.archive.org/web/20190323123400/https://codahale.com/downloads/email-to-donald.txt

- https://www.dynamodbguide.com/the-dynamo-paper

- https://blog.sulami.xyz/posts/why-i-like-clojure/

- https://medium.com/javascript-scene/mocking-is-a-code-smell-944a70c90a6a

- https://medium.com/airbnb-engineering/sunsetting-react-native-1868ba28e30a

- https://dropbox.tech/mobile/the-not-so-hidden-cost-of-sharing-code-between-ios-and-android

- https://phoboslab.org/log/2019/06/pl-mpeg-single-file-library

- http://cachestocaches.com/2019/8/myths-list-antipattern/

- https://code.thheller.com/blog/shadow-cljs/2018/06/15/why-not-webpack.html

- https://snow-dev.com/posts/ecs-cd-with-codepipeline-in-terraform.html

- https://www.cloudbees.com/blog/terraforming-your-docker-environment-on-aws

- https://blog.danslimmon.com/2019/07/15/do-nothing-scripting-the-key-to-gradual-automation/

- https://blog.gruntwork.io/an-introduction-to-terraform-f17df9c6d180

- https://boats.gitlab.io/blog/post/notes-on-a-smaller-rust/

- https://medium.com/@thi.ng/how-to-ui-in-2018-ac2ae02acdf3

- https://jessicagreben.medium.com/how-to-terraform-locking-state-in-s3-2dc9a5665cb6

- https://ferd.ca/ten-years-of-erlang.html

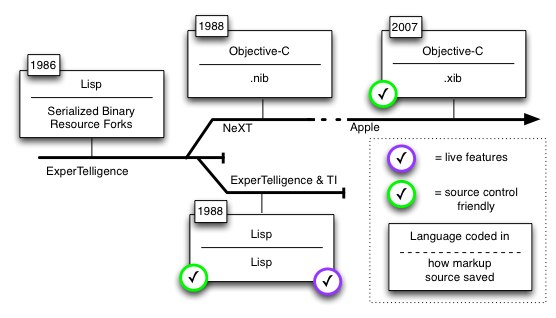

- https://paulhammant.com/2013/03/28/interface-builders-alternative-lisp-timeline/

- http://blog.pnkfx.org/blog/2019/06/26/breaking-news-non-lexical-lifetimes-arrives-for-everyone/

- https://itnext.io/updating-an-sql-database-schema-using-node-js-6c58173a455a

- https://web.archive.org/web/20210218084259/https://expeditedsecurity.com/aws-in-plain-english/

- https://datawhatnow.com/things-you-are-probably-not-using-in-python-3-but-should/

- http://blogs.tedneward.com/post/the-vietnam-of-computer-science/

- https://vorpus.org/blog/why-im-not-collaborating-with-kenneth-reitz/

- https://yourlabs.org/posts/2019-04-19-storing-hd-photos-in-a-relational-database-recipe-for-an-epic-fail/

- https://slack.engineering/evolving-api-pagination-at-slack/

- https://matthewrayfield.com/articles/animating-urls-with-javascript-and-emojis/#%F0%9F%8C%95%F0%9F%8C%95%F0%9F%8C%95%F0%9F%8C%95%F0%9F%8C%95%F0%9F%8C%95%F0%9F%8C%95%F0%9F%8C%98%F0%9F%8C%91%F0%9F%8C%9101:22%E2%95%B101:50

- http://www.beyondthelines.net/databases/dynamodb-vs-cassandra/

- https://blog.bloomca.me/2019/02/23/alternatives-to-jsx.html

- https://iamturns.com/typescript-babel/

- https://blog.haschek.at/2018/the-curious-case-of-the-RasPi-in-our-network.html

- https://jrheard.tumblr.com/post/43575891007/explorations-in-clojures-corelogic

- https://www.theguardian.com/info/2018/nov/30/bye-bye-mongo-hello-postgres

- https://www.cloudbees.com/blog/tuning-nginx

- https://blogs.oracle.com/javamagazine/java-builder-pattern-bloch?source=:so:tw:or:awr:jav:::&SC=:so:tw:or:awr:jav:::&pcode=

- https://www.kode-krunch.com/2021/07/hibernate-traps-transactional.html

- https://www.kode-krunch.com/2021/06/hibernate-traps-leaky-abstraction.html

- https://www.kode-krunch.com/2021/05/storing-read-optimized-trees-in.html

- https://www.kode-krunch.com/2021/05/creating-simple-yet-powerful-expression.html

- https://www.kode-krunch.com/2021/05/dealing-with-springs-reactive-webclient.html

- https://chriswarrick.com/blog/2018/07/17/pipenv-promises-a-lot-delivers-very-little/#

- https://blog.cleancoder.com/uncle-bob/2016/05/01/TypeWars.html

- https://paulhammant.com/2013/02/04/the-importance-of-the-dom

- https://adambard.com/blog/easy-auth-with-friend/

- https://madattheinternet.com/2021/07/08/where-the-sidewalk-ends-the-death-of-the-internet/

- https://blog.discourse.org/2021/07/faster-user-uploads-on-discourse-with-rust-webassembly-and-mozjpeg

- https://www.notamonadtutorial.com/clojerl-an-implementation-of-the-clojure-language-that-runs-on-the-beam/

- https://trekhleb.dev/blog/2021/binary-floating-point/

- https://www.juxt.pro/blog/maven-central

- https://www.juxt.pro/blog/bitemporality-more-than-a-design-pattern

- https://www.juxt.pro/blog/json-in-clojure

- https://opencrux.com/main/index.html

- https://eli.thegreenplace.net/2017/clojure-concurrency-and-blocking-with-coreasync/

- https://martintrojer.github.io/clojure/2013/07/07/coreasync-and-blocking-io

- https://blog.frankel.ch/start-rust/7/

- https://adambard.com/blog/easy-auth-with-friend/

- https://crisal.io/words/2020/02/28/C++-rust-ffi-patterns-1-complex-data-structures.html

- https://www.pixelstech.net/article/1582964859-How-many-bytes-a-boolean-value-takes-in-Java

- http://www.johngustafson.net/pdfs/BeatingFloatingPoint.pdf

- https://erlang.org/download/armstrong_thesis_2003.pdf

- https://bien.ee/a-contenteditable-pasted-garbage-and-caret-placement-walk-into-a-pub/

- https://corfield.org/blog/2021/07/21/deps-edn-monorepo-4/

- https://world.hey.com/dhh/modern-web-apps-without-javascript-bundling-or-transpiling-a20f2755

- https://stegosaurusdormant.com/understanding-derive-clone/

- https://www.2ndquadrant.com/en/blog/postgresql-anti-patterns-unnecessary-jsonhstore-dynamic-columns/

- https://opencrux.com/blog/crux-strength-of-the-record.html

- https://tenthousandmeters.com/blog/python-behind-the-scenes-12-how-asyncawait-works-in-python/

- https://www.infoq.com/presentations/Impedance-Mismatch/

- https://albertnetymk.github.io/2021/08/03/template_interpreter/

https://lukeplant.me.uk/blog/posts/yagni-exceptions/

YAGNI exceptions

by Luke Plant Posted in: Software development — June 29, 2021 at 09:42

I’m essentially a believer in You Aren’t Gonna Need It — the principle that you should add features to your software — including generality and abstraction — when it becomes clear that you need them, and not before.

However, there are some things which really are easier to do earlier than later, and where natural tendencies or a ruthless application of YAGNI might neglect them. This is my collection so far:

-

Applications of Zero One Many. If the requirements go from saying “we need to be able to store an address for each user”, to “we need to be able to store two addresses for each user”, 9 times out of 10 you should go straight to “we can store many addresses for each user”, with a soft limit of two for the user interface only, because there is a very high chance you will need more than two. You will almost certainly win significantly by making that assumption, and even if you lose it won’t be by much.

-

Versioning. This can apply to protocols, APIs, file formats etc. It is good to think about how, for example, a client/server system will detect and respond to different versions ahead of time (i.e. even when there is only one version), especially when you don’t control both ends or can’t change them together, because it is too late to think about this when you find you need a version 2 after all. This is really an application of Embrace Change, which is a principle at the heart of YAGNI.

-

Logging. Especially for after-the-fact debugging, and in non-deterministic or hard to reproduce situations, where it is often too late to add it after you become aware of a problem.

-

Timestamps.

For example, creation timestamps, as Simon Willison tweeted:

A lesson I re-learn on every project: always have an automatically populated “created_at” column on every single database table. Any time you think “I won’t need it here” you’re guaranteed to want to use it for debugging something a few weeks later.

More generally, instead of a boolean flag, e.g. completed, a nullable timestamp of when the state was entered, completed_at, can be much more useful.

-

Generalising from the “logging” and “timestamps” points, collecting a bit more data than you need right now is usually not a problem (unless it is personal or otherwise sensitive data), because you can always throw it away. But if you never collected it, it’s gone forever. I have won significantly when I’ve anticipated the need for auditing which wasn’t completely explicit in the requirements, and I’ve lost significantly when I’ve gone for data minimalism which lost key information and limited what I could do with the data later.

-

A relational database.

By this I mean, if you need a database at all, you should jump to having a relational one straight away, and default to a relational schema, even if your earliest set of requirements could be served by a “document database” or some basic flat-file system. Most data is relational by nature, and a non-relational database is a very bad default for almost all applications.

If you choose a relational database like PostgreSQL, and it later turns out a lot of your data is “document like”, you can use its excellent support for JSON.

However, if you choose a non-rel DB like MongoDB, even when it seems like you’ve got a perfect fit in terms of current schema needs, most likely a new, “simple” requirement will cause you a lot of pain, and prompt a rewrite in Postgres (see sections “How MongoDB Stores Data” and “Epilogue” in that article).

I thought a comment on Lobsters I read the other day was insightful here:

I wonder if the reason that “don’t plan, don’t abstract, don’t engineer for the future” is such good advice is that most people are already building on top of highly-abstracted and featureful platforms, which don’t need to be abstracted further?

We can afford to do YAGNI only when the systems we are working with are malleable and featureful. Relational databases are extremely flexible systems that provide insurance against future requirements changes. For example, my advice in the previous section implicitly depends on the fact that removing data you don’t need can be as simple as “DROP COLUMN”, which is almost free (well, sometimes…).

That’s my list so far, I’ll probably add to it over time. Do you agree? What did I miss?

Links

Discussion of this post on Twitter

Commit messages guide

What is a "commit"?

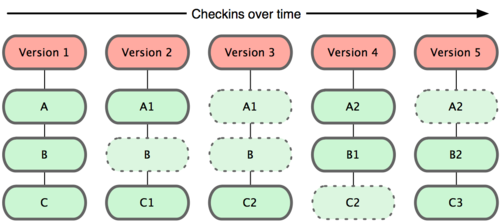

In simple terms, a commit is a snapshot of your local files, written in your local repository. Contrary to what some people think, git doesn't store only the difference between the files, it stores a full version of all files. For files that didn't change from one commit to another, git stores just a link to the previous identical file that is already stored.

The image below shows how git stores data over time, in which each "Version" is a commit:

Why are commit messages important?

- To speed up and streamline code reviews

- To help in the understanding of a change

- To explain "the whys" that cannot be described only with code

- To help future maintainers figure out why and how changes were made, making troubleshooting and debugging easier

To maximize those outcomes, we can use some good practices and standards described in the next section.

Good practices

These are some practices collected from my experiences, internet articles, and other guides. If you have others (or disagree with some) feel free to open a Pull Request and contribute.

Use imperative form

# Good

Use InventoryBackendPool to retrieve inventory backend

# Bad

Used InventoryBackendPool to retrieve inventory backend

But why use the imperative form?

A commit message describes what the referenced change actually does, its effects, not what was done.

This excellent article from Chris Beams gives us a simple sentence that can be used to help us write better commit messages in imperative form:

If applied, this commit will <commit message>

Examples:

# Good

If applied, this commit will use InventoryBackendPool to retrieve inventory backend

# Bad

If applied, this commit will used InventoryBackendPool to retrieve inventory backend

Capitalize the first letter

# Good

Add `use` method to Credit model

# Bad

add `use` method to Credit model

The reason that the first letter should be capitalized is to follow the grammar rule of using capital letters at the beginning of sentences.

The use of this practice may vary from person to person, team to team, or even from language to language. Capitalized or not, an important point is to stick to a single standard and follow it.

Try to communicate what the change does without having to look at the source code

# Good

Add `use` method to Credit model

# Bad

Add `use` method

# Good

Increase left padding between textbox and layout frame

# Bad

Adjust css

It is useful in many scenarios (e.g. multiple commits, several changes and refactors) to help reviewers understand what the committer was thinking.

Use the message body to explain "why", "for what", "how" and additional details

# Good

Fix method name of InventoryBackend child classes

Classes derived from InventoryBackend were not

respecting the base class interface.

It worked because the cart was calling the backend implementation

incorrectly.

# Good

Serialize and deserialize credits to json in Cart

Convert the Credit instances to dict for two main reasons:

- Pickle relies on file path for classes and we do not want to break up

everything if a refactor is needed

- Dict and built-in types are pickleable by default

# Good

Add `use` method to Credit

Change from namedtuple to class because we need to

setup a new attribute (in_use_amount) with a new value

The subject and the body of the messages are separated by a blank line. Additional blank lines are considered as a part of the message body.

Characters like -, * and ` are elements that improve readability.

Avoid generic messages or messages without any context

# Bad

Fix this

Fix stuff

It should work now

Change stuff

Adjust css

Limit the number of characters

It's recommended to use a maximum of 50 characters for the subject and 72 for the body.

Keep language consistency

For project owners: Choose a language and write all commit messages using that language. Ideally, it should match the code comments, default translation locale (for localized projects), etc.

For contributors: Write your commit messages using the same language as the existing commit history.

# Good

ababab Add `use` method to Credit model

efefef Use InventoryBackendPool to retrieve inventory backend

bebebe Fix method name of InventoryBackend child classes

# Good (Portuguese example)

ababab Adiciona o método `use` ao model Credit

efefef Usa o InventoryBackendPool para recuperar o backend de estoque

bebebe Corrige nome de método na classe InventoryBackend

# Bad (mixes English and Portuguese)

ababab Usa o InventoryBackendPool para recuperar o backend de estoque

efefef Add `use` method to Credit model

cdcdcd Agora vai

Template

This is a template, written originally by Tim Pope, which appears in the Pro Git Book.

Summarize changes in around 50 characters or less

More detailed explanatory text, if necessary. Wrap it to about 72

characters or so. In some contexts, the first line is treated as the

subject of the commit and the rest of the text as the body. The

blank line separating the summary from the body is critical (unless

you omit the body entirely); various tools like `log`, `shortlog`

and `rebase` can get confused if you run the two together.

Explain the problem that this commit is solving. Focus on why you

are making this change as opposed to how (the code explains that).

Are there side effects or other unintuitive consequences of this

change? Here's the place to explain them.

Further paragraphs come after blank lines.

- Bullet points are okay, too

- Typically a hyphen or asterisk is used for the bullet, preceded

by a single space, with blank lines in between, but conventions

vary here

If you use an issue tracker, put references to them at the bottom,

like this:

Resolves: #123

See also: #456, #789

Rebase vs. Merge

This section is a TL;DR of Atlassian's excellent tutorial, "Merging vs. Rebasing".

Rebase

TL;DR: Applies your branch commits, one by one, upon the base branch, generating a new tree.

Merge

TL;DR: Creates a new commit, called (appropriately) a merge commit, with the differences between the two branches.

Why do some people prefer to rebase over merge?

I particularly prefer to rebase over merge. The reasons include:

- It generates a "clean" history, without unnecessary merge commits

- What you see is what you get, i.e., in a code review all changes come from a specific and entitled commit, avoiding changes hidden in merge commits

- More merges are resolved by the committer, and every merge change is in a commit with a proper message

- It's unusual to dig in and review merge commits, so avoiding them ensures all changes have a commit where they belong

When to squash

"Squashing" is the process of taking a series of commits and condensing them into a single commit.

It's useful in several situations, e.g.:

- Reducing commits with little or no context (typo corrections, formatting, forgotten stuff)

- Joining separate changes that make more sense when applied together

- Rewriting work in progress commits

When to avoid rebase or squash?

Avoid rebase and squash in public commits or in shared branches where multiple people work on. Rebase and squash rewrite history and overwrite existing commits, doing it on commits that are on shared branches (i.e., commits pushed to a remote repository or that comes from others branches) can cause confusion and people may lose their changes (both locally and remotely) because of divergent trees and conflicts.

Useful git commands

rebase -i

Use it to squash commits, edit messages, rewrite/delete/reorder commits, etc.

pick 002a7cc Improve description and update document title

pick 897f66d Add contributing section

pick e9549cf Add a section of Available languages

pick ec003aa Add "What is a commit" section"

pick bbe5361 Add source referencing as a point of help wanted

pick b71115e Add a section explaining the importance of commit messages

pick 669bf2b Add "Good practices" section

pick d8340d7 Add capitalization of first letter practice

pick 925f42b Add a practice to encourage good descriptions

pick be05171 Add a section showing good uses of message body

pick d115bb8 Add generic messages and column limit sections

pick 1693840 Add a section about language consistency

pick 80c5f47 Add commit message template

pick 8827962 Fix triple "m" typo

pick 9b81c72 Add "Rebase vs Merge" section

# Rebase 9e6dc75..9b81c72 onto 9e6dc75 (15 commands)

#

# Commands:

# p, pick = use commit

# r, reword = use commit, but edit the commit message

# e, edit = use commit, but stop for amending

# s, squash = use commit, but meld into the previous commit

# f, fixup = like "squash", but discard this commit's log message

# x, exec = run command (the rest of the line) using shell

# d, drop = remove commit

#

# These lines can be re-ordered; they are executed from top to bottom.

#

# If you remove a line here THAT COMMIT WILL BE LOST.

#

# However, if you remove everything, the rebase will be aborted.

#

# Note that empty commits are commented out

fixup

Use it to clean up commits easily and without needing a more complex rebase. This article has very good examples of how and when to do it.

cherry-pick

It is very useful to apply that commit you made on the wrong branch, without the need to code it again.

Example:

$ git cherry-pick 790ab21

[master 094d820] Fix English grammar in Contributing

Date: Sun Feb 25 23:14:23 2018 -0300

1 file changed, 1 insertion(+), 1 deletion(-)

add/checkout/reset [--patch | -p]

Let's say we have the following diff:

diff --git a/README.md b/README.md

index 7b45277..6b1993c 100644

--- a/README.md

+++ b/README.md

@@ -186,10 +186,13 @@ bebebe Corrige nome de método na classe InventoryBackend

``

# Bad (mixes English and Portuguese)

ababab Usa o InventoryBackendPool para recuperar o backend de estoque

-efefef Add `use` method to Credit model

cdcdcd Agora vai

``

+### Template

+

+This is a template, [written originally by Tim Pope](http://tbaggery.com/2008/04/19/a-note-about-git-commit-messages.html), which appears in the [_Pro Git Book_](https://git-scm.com/book/en/v2/Distributed-Git-Contributing-to-a-Project).

+

## Contributing

Any kind of help would be appreciated. Example of topics that you can help me with:

@@ -202,3 +205,4 @@ Any kind of help would be appreciated. Example of topics that you can help me wi

- [How to Write a Git Commit Message](https://chris.beams.io/posts/git-commit/)

- [Pro Git Book - Commit guidelines](https://git-scm.com/book/en/v2/Distributed-Git-Contributing-to-a-Project#_commit_guidelines)

+- [A Note About Git Commit Messages](https://tbaggery.com/2008/04/19/a-note-about-git-commit-messages.html)

We can use git add -p to add only the patches we want to, without the need to change the code that is already written.

It's useful to split a big change into smaller commits or to reset/checkout specific changes.

Stage this hunk [y,n,q,a,d,/,j,J,g,s,e,?]? s

Split into 2 hunks.

hunk 1

@@ -186,7 +186,6 @@

``

# Bad (mixes English and Portuguese)

ababab Usa o InventoryBackendPool para recuperar o backend de estoque

-efefef Add `use` method to Credit model

cdcdcd Agora vai

``

Stage this hunk [y,n,q,a,d,/,j,J,g,e,?]?

hunk 2

@@ -190,6 +189,10 @@

``

cdcdcd Agora vai

``

+### Template

+

+This is a template, [written originally by Tim Pope](http://tbaggery.com/2008/04/19/a-note-about-git-commit-messages.html), which appears in the [_Pro Git Book_](https://git-scm.com/book/en/v2/Distributed-Git-Contributing-to-a-Project).

+

## Contributing

Any kind of help would be appreciated. Example of topics that you can help me with:

Stage this hunk [y,n,q,a,d,/,K,j,J,g,e,?]?

hunk 3

@@ -202,3 +205,4 @@ Any kind of help would be appreciated. Example of topics that you can help me wi

- [How to Write a Git Commit Message](https://chris.beams.io/posts/git-commit/)

- [Pro Git Book - Commit guidelines](https://git-scm.com/book/en/v2/Distributed-Git-Contributing-to-a-Project#_commit_guidelines)

+- [A Note About Git Commit Messages](https://tbaggery.com/2008/04/19/a-note-about-git-commit-messages.html)

Other interesting stuff

- https://whatthecommit.com/

- https://gitmoji.carloscuesta.me/

Like it?

Contributing

Any kind of help would be appreciated. Example of topics that you can help me with:

- Grammar and spelling corrections

- Translation to other languages

- Improvement of source referencing

- Incorrect or incomplete information

Inspirations, sources and further reading

- How to Write a Git Commit Message

- Pro Git Book - Commit guidelines

- A Note About Git Commit Messages

- Merging vs. Rebasing

- Pro Git Book - Rewriting History

https://www.cloudbees.com/blog/understanding-dockers-cmd-and-entrypoint-instructions

Understanding Docker's CMD and ENTRYPOINT Instructions

Written by: Ben Cane June 21, 2017

When creating a Docker container, the goal is generally that anyone could simply execute docker run <containername> and launch the container. In today's article, we are going to explore two key Dockerfile instructions that enable us to do just that. Let's explore the differences between the CMD and ENTRYPOINT instructions.

On the surface, the CMD and ENTRYPOINT instructions look like they perform the same function. However, once you dig deeper, it's easy to see that these two instructions perform completely different tasks.

ApacheBench Dockerfile

To help serve as an example, we're going to create a Docker container that simply executes the ApacheBench utility.

In earlier articles, we discovered the simplicity and usefulness of the ApacheBench load testing tool. However, this type of command-line utility is not generally the type of application one would "Dockerize." The general usage of Docker is focused more on creating services rather than single execution tools like ApacheBench.

The main reason behind this is that typically Docker containers are not built to accept additional parameters when launching. This makes it tricky to use a command-line tool within a container.

Let's see this in action by creating a Docker container that can be used to execute ApacheBench against any site.

FROM ubuntu:latest

RUN apt-get update && \

apt-get install -y apache2-utils && \

rm -rf /var/lib/apt/lists/*

CMD ab

In the Dockerfile, we are simply using the ubuntu:latest image as our base container image, installing the apache2-utils package, and then defining that the command for this container is the ab command.

Since this Docker container is planned to be used as an executor for the ab command, it makes sense to set the CMD instruction value to the ab command. However, if we run this container we will start to see an interesting difference between this container and other application containers.

Before we can run this container, however, we first need to build it. We can do so with the docker build command.

$ docker build -t ab .

Sending build context to Docker daemon 2.048 kB

Step 1/3 : FROM ubuntu:latest

---> ebcd9d4fca80

Step 2/3 : RUN apt-get update && apt-get install -y apache2-utils && rm -rf /var/lib/apt/lists/*

---> Using cache

---> d9304ff09c98

Step 3/3 : CMD ab

---> Using cache

---> ecfc71e7fba9

Successfully built ecfc71e7fba9

When building this container, I tagged the container with the name of ab. This means we can simply launch this container via the name ab.

$ docker run ab

ab: wrong number of arguments

Usage: ab [options] [http[s]://]hostname[:port]/path

Options are:

-n requests Number of requests to perform

-c concurrency Number of multiple requests to make at a time

-t timelimit Seconds to max. to spend on benchmarking

This implies -n 50000

-s timeout Seconds to max. wait for each response

Default is 30 seconds

When we run the ab container, we get back an error from the ab command as well as usage details. The reason for this is that we defined the CMD instruction to the ab command without specifying any flags or target host to load test against. This CMDinstruction is used to define what command the container should execute when launched. Since we defined that as the abcommand without arguments, it executed the ab command without arguments.

However, like most command-line tools, that simply isn't how ab works. With ab, you need to specify what URL you wish to test against.

What we can do in order to make this work is override the CMD instruction when we launch the container. We can do this by adding the command and arguments we wish to execute at the end of the docker run command.

$ docker run ab ab http://bencane.com/

Benchmarking bencane.com (be patient).....done

Concurrency Level: 1

Time taken for tests: 0.343 seconds

Complete requests: 1

Failed requests: 0

Total transferred: 98505 bytes

HTML transferred: 98138 bytes

Requests per second: 2.92 [#/sec] (mean)

Time per request: 342.671 [ms] (mean)

Time per request: 342.671 [ms] (mean, across all concurrent requests)

Transfer rate: 280.72 [Kbytes/sec] received

When we add ab http://bencane.com to the end of our docker run command, we are able to override the CMD instruction and execute the ab command successfully. However, while we were successful, this process of overriding the CMD instruction is rather clunky.

ENTRYPOINT

This is where the ENTRYPOINT instruction shines. The ENTRYPOINT instruction works very similarly to CMD in that it is used to specify the command executed when the container is started. However, where it differs is that ENTRYPOINT doesn't allow you to override the command.

Instead, anything added to the end of the docker run command is appended to the command. To understand this better, let's go ahead and change our CMD instruction to the ENTRYPOINT instruction.

FROM ubuntu:latest

RUN apt-get update && \

apt-get install -y apache2-utils && \

rm -rf /var/lib/apt/lists/*

ENTRYPOINT ["ab"]

After editing the Dockerfile, we will need to build the image once again.

$ docker build -t ab .

Sending build context to Docker daemon 2.048 kB

Step 1/3 : FROM ubuntu:latest

---> ebcd9d4fca80

Step 2/3 : RUN apt-get update && apt-get install -y apache2-utils && rm -rf /var/lib/apt/lists/*

---> Using cache

---> d9304ff09c98

Step 3/3 : ENTRYPOINT ab

---> Using cache

---> aa020cfe0708

Successfully built aa020cfe0708

Now, we can run the ab container once again; however, this time, rather than specifying ab http://bencane.com, we can simply add http://bencane.com to the end of the docker run command.

$ docker run ab http://bencane.com/

Benchmarking bencane.com (be patient).....done

Concurrency Level: 1

Time taken for tests: 0.436 seconds

Complete requests: 1

Failed requests: 0

Total transferred: 98505 bytes

HTML transferred: 98138 bytes

Requests per second: 2.29 [#/sec] (mean)

Time per request: 436.250 [ms] (mean)

Time per request: 436.250 [ms] (mean, across all concurrent requests)

Transfer rate: 220.51 [Kbytes/sec] received

As the above example shows, we have now essentially turned our container into an executable. If we wanted, we could add additional flags to the ENTRYPOINT instruction to simplify a complex command-line tool into a single-argument Docker container.

Be careful with syntax

One imporant thing to call out about the ENTRYPOINT instruction is that syntax is critical. Technically, ENTRYPOINT supports both the ENTRYPOINT ["command"] syntax and the ENTRYPOINT command syntax. However, while both of these are supported, they have two different meanings and change how ENTRYPOINT works.

Let's change our Dockerfile to match this syntax and see how it changes our containers behavior.

FROM ubuntu:latest

RUN apt-get update && \

apt-get install -y apache2-utils && \

rm -rf /var/lib/apt/lists/*

ENTRYPOINT ab

With the changes made, let's build the container.

$ docker build -t ab .

Sending build context to Docker daemon 2.048 kB

Step 1/3 : FROM ubuntu:latest

---> ebcd9d4fca80

Step 2/3 : RUN apt-get update && apt-get install -y apache2-utils && rm -rf /var/lib/apt/lists/*

---> Using cache

---> d9304ff09c98

Step 3/3 : ENTRYPOINT ab

---> Using cache

---> bbfe2686a064

Successfully built bbfe2686a064

With the container built, let's run it again using the same options as before.

$ docker run ab http://bencane.com/

ab: wrong number of arguments

Usage: ab [options] [http[s]://]hostname[:port]/path

Options are:

-n requests Number of requests to perform

-c concurrency Number of multiple requests to make at a time

-t timelimit Seconds to max. to spend on benchmarking

This implies -n 50000

-s timeout Seconds to max. wait for each response

Default is 30 seconds

It looks like we are back to the same behavior as the CMD instruction. However, if we try to override the ENTRYPOINT we will see different behavior than when we overrode the CMD instruction.

$ docker run ab ab http://bencane.com/

ab: wrong number of arguments

Usage: ab [options] [http[s]://]hostname[:port]/path

Options are:

-n requests Number of requests to perform

-c concurrency Number of multiple requests to make at a time

-t timelimit Seconds to max. to spend on benchmarking

This implies -n 50000

-s timeout Seconds to max. wait for each response

Default is 30 seconds

With the ENTRYPOINT instruction, it is not possible to override the instruction during the docker run command execution like we are with CMD. This highlights another usage of ENTRYPOINT, as a method of ensuring that a specific command is executed when the container in question is started regardless of attempts to override the ENTRYPOINT.

Summary

In this article, we covered quite a bit about CMD and ENTRYPOINT; however, there are still additional uses of these two instructions that allow you to customize how a Docker container starts. To see some of these examples, you can take a look at Docker's Dockerfilereference docs.

With the above example however, we now have a way to "Dockerize" simple command-line tools such as ab, which opens up quite a few interesting use cases. If you have one, feel free to share it in the comments below.

https://scattered-thoughts.net/writing/against-sql

Against SQL

Jamie Brandon, Last updated 2021-07-09

TLDR

The relational model is great:

- A shared universal data model allows cooperation between programs written in many different languages, running on different machines and with different lifespans.

- Normalization allows updating data without worrying about forgetting to update derived data.

- Physical data independence allows changing data-structures and query plans without having to change all of your queries.

- Declarative constraints clearly communicate application invariants and are automatically enforced.

- Unlike imperative languages, relational query languages don't have false data dependencies created by loop counters and aliasable pointers. This makes relational languages:

- A good match for modern machines. Data can be rearranged for more compact layouts, even automatic compression. Operations can be reordered for high cache locality, pipeline-friendly hot loops, simd etc.

- Amenable to automatic parallelization.

- Amenable to incremental maintenance.

But SQL is the only widely-used implementation of the relational model, and it is:

This isn't just a matter of some constant programmer overhead, like SQL queries taking 20% longer to write. The fact that these issues exist in our dominant model for accessing data has dramatic downstream effects for the entire industry:

- Complexity is a massive drag on quality and innovation in runtime and tooling

- The need for an application layer with hand-written coordination between database and client renders useless most of the best features of relational databases

The core message that I want people to take away is that there is potentially a huge amount of value to be unlocked by replacing SQL, and more generally in rethinking where and how we draw the lines between databases, query languages and programming languages.

Inexpressive

Talking about expressiveness is usually difficult, since it's a very subjective measure. But SQL is a particularly inexpressive language. Many simple types and computations can't be expressed at all. Others require far more typing than they need to. And often the structure is fragile - small changes to the computation can require large changes to the code.

Can't be expressed

Let's start with the easiest examples - things that can't be expressed in SQL at all.

For example, SQL:2016 added support for json values. In most languages json support is provided by a library. Why did SQL have to add it to the language spec?

First, while SQL allows user-defined types, it doesn't have any concept of a sum type. So there is no way for a user to define the type of an arbitrary json value:

#![allow(unused)] fn main() { enum Json { Null, Bool(bool), Number(Number), String(String), Array(Vec<Value>), Object(Map<String, Value>), } }

The usual response to complaints about the lack of sum types in sql is that you should use an id column that joins against multiple tables, one for each possible type.

create table json_value(id integer);

create table json_bool(id integer, value bool)

create table json_number(id integer, value double);

create table json_string(id integer, value text);

create table json_array(id integer);

create table json_array_elements(id integer, position integer, value json_value, foreign key (value) references json_value(id));

create table json_object(id integer);

create table json_object_properties(id integer, key text, value json_value, foreign key (value) references json_value(id));

This works for data modelling (although it's still clunky because you must try joins against each of the tables at every use site rather than just ask the value which table it refers to). But this solution is clearly inappropriate for modelling a value like json that can be created inside scalar expressions, where inserts into some global table are not allowed.

Second, parsing json requires iteration. SQLs with recursive is limited to linear recursion and has a bizarre choice of semantics - each step can access only the results from the previous step, but the result of the whole thing is the union of all the steps. This makes parsing, and especially backtracking, difficult. Most SQL databases also have a procedural sublanguage that has explicit iteration, but there are few commonalities between the languages in different databases. So there is no pure-SQL json parser that works across different databases.

Third, most databases have some kind of extension system that allows adding new types and functions using a regular programming language (usually c). Indeed, this is how json support first appeared in many databases. But again these extension systems are not at all standardized so it's not feasible to write a library that works across many databases.

So instead the best we can do is add json to the SQL spec and hope that all the databases implement it in a compatible way (they don't).

The same goes for xml, regular expressions, windows, multi-dimensional arrays, periods etc.

Compare how flink exposes windowing:

- The interface is made out of objects and function calls, both of which are first-class values and can be stored in variables and passed as function arguments.

- The style of windowing is defined by a WindowAssigner which simply takes a row and returns a set of window ids.

- Several common styles of windows are provided as library code.

Vs SQL:

- The interface adds a substantial amount of new syntax to the language.

- The windowing style is purely syntactic - it is not a value that can be assigned to a variable or passed to a function. This means that we can't compress common windowing patterns.

- Only a few styles of windowing are provided and they are hard-coded into the language.

Why is the SQL interface defined this way?

Much of this is simply cultural - this is just how new SQL features are designed.

But even if we wanted to mimic the flink interface we couldn't do it in SQL.

- Functions are not values that can be passed around, and they can't take tables or other functions as arguments. So complex operations such as windowing can't be added as stdlib functions.

- Without sum types we can't even express the hardcoded windowing styles as a value. So we're forced to add new syntax whenever we want to parameterize some operation with several options.

Verbose to express

Joins are at the heart of the relational model. SQL's syntax is not unreasonable in the most general case, but there are many repeated join patterns that deserve more concise expression.

By far the most common case for joins is following foreign keys. SQL has no special syntax for this:

select foo.id, quux.value

from foo, bar, quux

where foo.bar_id = bar.id and bar.quux_id = quux.id

Compare to eg alloy, which has a dedicated syntax for this case:

foo.bar.quux

Or libraries like pandas or flink, where it's trivial to write a function that encapsulates this logic:

fk_join(foo, 'bar_id', bar, 'quux_id', quux)

Can we write such a function in sql? Most databases don't allow functions to take tables as arguments, and also require the column names and types of the input and output tables to be fixed when the function is defined. SQL:2016 introduced polymorphic table functions, which might allow writing something like fk_join but so far only oracle has implemented them (and they didn't follow the spec!).

Verbose syntax for such core operations has chilling effects downstream, such as developers avoiding 6NF even in situations where it's useful, because all their queries would balloon in size.

Fragile structure

There are many cases where a small change to a computation requires totally changing the structure of the query, but subqueries are my favourite because they're the most obvious way to express many queries and yet also provide so many cliffs to fall off.

-- for each manager, find their employee with the highest salary

> select

> manager.name,

> (select employee.name

> from employee

> where employee.manager = manager.name

> order by employee.salary desc

> limit 1)

> from manager;

name | name

-------+------

alice | bob

(1 row)

-- what if we want to return the salary too?

> select

> manager.name,

> (select employee.name, employee.salary

> from employee

> where employee.manager = manager.name

> order by employee.salary desc

> limit 1)

> from manager;

ERROR: subquery must return only one column

LINE 3: (select employee.name, employee.salary

^

-- the only solution is to change half of the lines in the query

> select manager.name, employee.name, employee.salary

> from manager

> join lateral (

> select employee.name, employee.salary

> from employee

> where employee.manager = manager.name

> order by employee.salary desc

> limit 1

> ) as employee

> on true;

name | name | salary

-------+------+--------

alice | bob | 100

(1 row)

This isn't terrible in such a simple example, but in analytics it's not uncommon to have to write queries that are hundreds of lines long and have many levels of nesting, at which point this kind of restructuring is laborious and error-prone.

Incompressible

Code can be compressed by extracting similar structures from two or more sections. For example, if a calculation was used in several places we could assign it to a variable and then use the variable in those places. Or if the calculation depended on different inputs in each place, we could create a function and pass the different inputs as arguments.

This is programming 101 - variables, functions and expression substitution. How does SQL fare on this front?

Variables

Scalar values can be assigned to variables, but only as a column inside a relation. You can't name a thing without including it in the result! Which means that if you want a temporary scalar variable you must introduce a new select to get rid off it. And also name all your other values.

-- repeated structure

select a+((z*2)-1), b+((z*2)-1) from foo;

-- compressed?

select a2, b2 from (select a+tmp as a2, b+tmp as b2, (z*2)-1 as tmp from foo);

You can use as to name scalar values anywhere they appear. Except in a group by.

-- can't name this value

> select x2 from foo group by x+1 as x2;

ERROR: syntax error at or near "as"

LINE 1: select x2 from foo group by x+1 as x2;

-- sprinkle some more select on it

> select x2 from (select x+1 as x2 from foo) group by x2;

?column?

----------

(0 rows)

Rather than fix this bizarre oversight, the SQL spec allows a novel form of variable naming - you can refer to a column by using an expression which produces the same parse tree as the one that produced the column.

-- this magically works, even though x is not in scope in the select

> select (x + 1)*2 from foo group by x+1;

?column?

----------

(0 rows)

-- but this doesn't, because it isn't the same parse tree

> select (x + +1)*2 from foo group by x+1;

ERROR: column "foo.x" must appear in the GROUP BY clause or be used in an aggregate function

LINE 1: select (x + +1)*2 from foo group by x+1;

^

Of course, you can't use this feature across any kind of syntactic boundary. If you wanted to, say, assign this table to a variable or pass it to a function, then you need to both repeat the expression and explicitly name it;

> with foo_plus as (select x+1 from foo group by x+1)

> select (x+1)*2 from foo_plus;

ERROR: column "x" does not exist

LINE 2: select (x+1)*2 from foo_plus;

^

> with foo_plus as (select x+1 as x_plus from foo group by x+1)

> select x_plus*2 from foo_plus;

?column?

----------

(0 rows)

SQL was first used in the early 70s, but if your repeated value was a table then you were out of luck until CTEs were added in SQL:99.

-- repeated structure

select *

from

(select x, x+1 as x2 from foo) as foo1

left join

(select x, x+1 as x2 from foo) as foo2

on

foo1.x2 = foo2.x;

-- compressed?

with foo_plus as

(select x, x+1 as x2 from foo)

select *

from

foo_plus as foo1

left join

foo_plus as foo2

on

foo1.x2 = foo2.x;

Functions

Similarly, if your repeated calculations have different inputs then you were out of luck until scalar functions were added in SQL:99.

-- repeated structure

select a+((x*2)-1), b+((y*2)-1) from foo;

-- compressed?

create function bar(integer, integer) returns integer

as 'select $1+(($2*2)-1);'

language sql;

select bar(a,x), bar(b,y) from foo;

Functions that return tables weren't added until SQL:2003.

-- repeated structure

(select x from foo)

union

(select x+1 from foo)

union

(select x+2 from foo)

union

(select x from bar)

union

(select x+1 from bar)

union

(select x+2 from bar);

-- compressed?

create function increments(integer) returns setof integer

as $$

(select $1)

union

(select $1+1)

union

(select $1+2);

$$

language sql;

(select increments(x) from foo)

union

(select increments(x) from bar);

What if you want to compress a repeated calculation that produces more than one table as a result? Tough!

What if you want to compress a repeated calculation where one of the inputs is a table? The spec doesn't explicitly disallow this, but it isn't widely supported. SQL server can do it with this lovely syntax:

-- compressed?

create type foo_like as table (x int);

create function increments(@foo foo_like readonly) returns table

as return

(select x from @foo)

union

(select x+1 from @foo)

union

(select x+2 from @foo);

declare @foo as foo_like;

insert into @foo select * from foo;

declare @bar as foo_like;

insert into @bar select * from bar;

increments(@foo) union increments(@bar);

Aside from the weird insistence that we can't just pass a table directly to our function, this example points to a more general problem: column names are part of types. If in our example bar happened to have a different column name then we would have had to write:

increments(@foo) union increments(select y as x from @bar)

Since columns names aren't themselves first-class this makes it hard to compress repeated structure that happens to involve different names:

-- repeated structure

select a,b,c,x,y,z from foo order by a,b,c,x,y,z;

-- fantasy land

with ps as (columns 'a,b,c,x,y,z')

select $ps from foo order by $ps

The same is true of windows, collations, string encodings, the part argument to extract ... pretty much anything that involves one of the several hundred SQL keywords.

Functions and types are also not first-class, so repeated structures involving different functions or types can't be compressed.

Expression substitution

To be able to compress repeated structure we must be able to replace the verbose version with the compressed version. In many languages, there is a principle that it's always possible to replace any expression with another expression that has the same value. SQL breaks this principle in (at least) two ways.

Firstly, it's only possible to substitute one expression for another when they are both the same type of expression. SQL has statements (DDL), table expressions and scalar expressions.

Using a scalar expression inside a table expression requires first wrapping the entire thing with a new select.

Using a table expression inside a scalar expression is generally not possible, unless the table expression returns only 1 column and either a) the table expression is guaranteed to return at most 1 row or b) your usage fits into one of the hard-coded patterns such as exists. Otherwise, as we saw in the most-highly-paid-employee example earlier, it must be rewritten as a lateral join against the nearest enclosing table expression.

Secondly, table expressions aren't all made equal. Some table expressions depend not only on the value of an inner expression, but the syntax. For example:

-- this is fine - the spec allows `order by` to see inside the `(select ...)`

-- and make use of a column `y` that doesn't exist in the returned value

> (select x from foo) order by y;

x

---

3

(1 row)

-- same value in the inner expression

-- but the spec doesn't have a syntactic exception for this case

> (select x from (select x from foo) as foo2) order by y;

ERROR: column "y" does not exist

LINE 1: (select x from (select x from foo) as foo2) order by y;

In such cases it's not possible to compress repeated structure without first rewriting the query to explicitly select and then drop the magic column:

select x from ((select x,y from foo) order by y);

Non-porous

I took the term 'porous' from Some Were Meant For C, where Stephen Kell argues that the endurance of c is down to it's extreme openness to interaction with other systems via foreign memory, FFI, dynamic linking etc. He contrasts this with managed languages which don't allow touching anything in the entire memory space without first notifying the GC, have their own internal notions of namespaces and linking which they don't expose to the outside world, have closed build systems which are hard to interface with other languages' build systems etc.

For non-porous languages to succeed they have to eat the whole world - gaining enough users that the entire programming ecosystem can be duplicated within their walled garden. But porous languages can easily interact with existing systems and make use of existing libraries and tools.

Whether or not you like this argument as applied to c, the notion of porousness itself is a useful lens for system design. When we apply it to SQL databases, we see while individual databases are often porous in many aspects of their design, the mechanisms are almost always not portable. So while individual databases can be extended in many ways, the extensions can't be shared between databases easily and the SQL spec is still left trying to eat the whole world.

Language level

Most SQL databases have language-level escape hatches for defining new types and functions via a mature programming language (usually c). The syntax for declaring these in SQL is defined in the spec but the c interface and calling convention is not, so these are not portable across different databases.

-- sql side

CREATE FUNCTION add_one(integer) RETURNS integer

AS 'DIRECTORY/funcs', 'add_one'

LANGUAGE C STRICT;

// c side

#include "postgres.h"

#include <string.h>

#include "fmgr.h"

#include "utils/geo_decls.h"

PG_MODULE_MAGIC;

PG_FUNCTION_INFO_V1(add_one);

Datum

add_one(PG_FUNCTION_ARGS)

{

int32 arg = PG_GETARG_INT32(0);

PG_RETURN_INT32(arg + 1);

}

Runtime level

Many SQL databases also have runtime-level extension mechanisms for creating new index types and storage methods (eg postgis) and also for supplying hints to the optimizer. Again, these extensions are not portable across different implementations. At this level it's hard to see how they could be, as they can be deeply entangled with design decisions in the database runtime, but it's worth noting that if they were portable then much of the SQL spec would not need to exist.

The SQL spec also has an extension SQL/MED which defines how to query data that isn't owned by the database, but it isn't widely or portably implemented.

Interface level

At the interface level, the status quo is much worse. Each database has a completely different interface protocol.

The protocols I'm familiar with are all ordered, synchronous and allow returning only one relation at a time. Many don't even support pipelining. For a long time SQL also lacked any way to return nested structures and even now (with json support) it's incredibly verbose.

This meant that if you wanted to return, say, a list of user profiles and their followers, you would have to make multiple round-trips to the database. Latency considerations make this unfeasible over longer distances. This practically mandates the existence of an application layer whose main purpose is to coalesce multiple database queries and reassemble their nested structure using hand-written joins over the output relations - duplicating work that the database is supposed to be good at.

Protocols also typically return metadata as text in an unspecified format with no parser supplied (even if there is a binary protocol for SQL values, metadata is still typically returned as a 1-row 1-column table containing a string). This makes it harder than necessary to build any kind of tooling outside of the database. Eg if we wanted to parse plans and verify that they don't contain any table scans or nested loops.

Similarly, SQL is submitted to the database as a text format identical to what the programmer would type. Since the syntax is so complicated, it's difficult for other languages to embed, validate and escape SQL queries and to figure out what types they return. (Query parameters are not a panacea for escaping - often you need to vary query structure depending on user input, not just values).

SQL databases are also typically monolithic. You can't, for example, just send a query plan directly to postgres. Or call the planner as a library to help make operational forecasts based on projected future workloads. Looking at the value unlocked by eg pg_query gives the sense that there could be a lot to gain by exposing more of the innards of SQL systems.

Complexity drag

In modern programming languages, the language itself consists of a small number of carefully chosen primitives. Programmers combine these to build up the rest of the functionality, which can be shared in the form of libraries. This lowers the burden on the language designers to foresee every possible need and allows new implementations to reuse existing functionality. Eg if you implement a new javascript interpreter, you get the whole javascript ecosystem for free.

Because SQL is so inexpressive, incompressible and non-porous it was never able to develop a library ecosystem. Instead, any new functionality that is regularly needed is added to the spec, often with it's own custom syntax. So if you develop a new SQL implementation you must also implement the entire ecosystem from scratch too because users can't implement it themselves.

This results in an enormous language.

The core SQL language is defined in part 2 (of 9) of the SQL 2016 spec. Part 2 alone is 1732 pages. By way of comparison, the javascript 2021 spec is 879 pages and the c++ 2020 spec is 1853 pages.

But the SQL spec is not even complete!

A quick grep of the SQL standard indicates 411 occurrences of implementation-defined behavior. And not in some obscure corner cases, this includes basic language features. For a programming language that would be ridiculous. But for some reason people accept the fact that SQL is incredibly under-specified, and that it is impossible to write even relatively simple analytical queries in a way that is portable across database systems.

Notably, the spec does not define type inference at all, which means that the results of basic arithmetic are implementation-defined. Here is an example from the sqlite test suite in various databases:

sqlite> SELECT DISTINCT - + 34 + + - 26 + - 34 + - 34 + + COALESCE ( 93, COUNT ( * ) + + 44 - 16, - AVG ( + 86 ) + 12 ) / 86 * + 55 * + 46;

2402

postgres> SELECT DISTINCT - + 34 + + - 26 + - 34 + - 34 + + COALESCE ( 93, COUNT ( * ) + + 44 - 16, - AVG ( + 86 ) + 12 ) / 86 * + 55 * + 46;

?column?

-----------------------

2607.9302325581395290

(1 row)

mariadb> SELECT DISTINCT - + 34 + + - 26 + - 34 + - 34 + + COALESCE ( 93, COUNT ( * ) + + 44 - 16, - AVG ( + 86 ) + 12 ) / 86 * + 55 * + 46;

ERROR 1064 (42000): You have an error in your SQL syntax; check the manual that corresponds to your MariaDB server version for the right syntax to use near '* ) + + 44 - 16, - AVG ( + 86 ) + 12 ) / 86 * + 55 * + 46' at line 1

The spec also declares that certain operations should produce errors when evaluated, but since it doesn't define an evaluation order the decision is left down to the optimizer. A query that runs fine in your database today might return an error tomorrow on the same data if the optimizer produces a different plan.

sqlite> select count(foo.bar/0) from (select 1 as bar) as foo where foo.bar = 0;

0

postgres> select count(foo.bar/0) from (select 1 as bar) as foo where foo.bar = 0;

ERROR: division by zero

mariadb> select count(foo.bar/0) from (select 1 as bar) as foo where foo.bar = 0;

+------------------+

| count(foo.bar/0) |

+------------------+

| 0 |

+------------------+

1 row in set (0.001 sec)

And despite being enormous and not even definining the whole language, the spec still manages to define a language so anemic that every database ends up with a raft of non-standard extensions to compensate.

Even if all the flaws I listed in the previous sections were to be fixed in the future, SQL already ate a monstrous amount of complexity in workarounds for those flaws and that complexity will never be removed from the spec. This complexity has a huge impact on the effort required to implement a new SQL engine.

To take an example close to my heart: Differential dataflow is a dataflow engine that includes support for automatic parallel execution, horizontal scaling and incrementally maintained views. It totals ~16kloc and was mostly written by a single person. Materialize adds support for SQL and various data sources. To date, that has taken ~128kloc (not including dependencies) and I estimate ~15-20 engineer-years. Just converting SQL to the logical plan takes ~27kloc, more than than the entirety of differential dataflow.

Similarly, sqlite looks to have ~212kloc and duckdb ~141kloc. The count for duckdb doesn't even include the parser that they (sensibly) borrowed from postgres, which at ~47kloc is much larger than the entire ~30kloc codebase for lua.

Materialize passes more than 7 million tests, including the entire sqlite logic test suite and much of the cockroachdb logic test suite. And yet they are still discovering (my) bugs in such core components as name resolution, which in any sane language would be trivial.

The entire database industry is hauling a massive SQL-shaped parachute behind them. This complexity creates a drag on everything downstream.

Quality of implementation suffers

There is so much ground to cover that it's not possible to do a good job of all of it. Subqueries, for example, add some much-needed expressiveness to SQL but their use is usually not recommended because most databases optimize them poorly or not at all.

This affects UX too. Every SQL database I've used has terrible syntax errors.

sqlite> with q17_part as (

...> select p_partkey from part where

...> p_brand = 'Brand#23'

...> and p_container = 'MED BOX'

...> ),

...> q17_avg as (

...> select l_partkey as t_partkey, 0.2 * avg(l_quantity) as t_avg_quantity

...> from lineitem

...> where l_partkey IN (select p_partkey from q17_part)

...> group by l_partkey

...> ),

...> q17_price as (

...> select

...> l_quantity,

...> l_partkey,

...> l_extendedprice

...> from

...> lineitem

...> where

...> l_partkey IN (select p_partkeyfrom q17_part)

...> ),

...> select cast(sum(l_extendedprice) / 7.0 as decimal(32,2)) as avg_yearly

...> from q17_avg, q17_price

...> where

...> t_partkey = l_partkey and l_quantity < t_avg_quantity;

Error: near "select": syntax error

But it's hard to produce good errors when your grammar contains 1732 non-terminals. And several hundred keywords. And allows using (some) keywords as identifiers. And contains many many ambiguities which mean that typos are often valid but nonsensical SQL.

Innovatation at the implementation level is gated

Incremental maintenance, parallel execution, provenance, equivalence checking, query synthesis etc. These show up in academic papers, produce demos for simplified subsets of SQL, and then disappear.

In the programming language world we have a smooth pipeline that takes basic research and applies it to increasingly realistic languages, eventually producing widely-used industrial-quality tools. But in the database world there is a missing step between demos on toy relational algebras and handling the enormity of SQL, down which most compelling research quietly plummets. Bringing anything novel to a usable level requires a substantial investment of time and money that most researchers simply don't have.

Portability is a myth

The spec is too large and too incomplete, and the incentives to follow the spec too weak. For example, the latest postgres docs note that "at the time of writing, no current version of any database management system claims full conformance to Core SQL:2016". It also lists a few dozen departures from the spec.

This is exacerbated by the fact that every database also has to invent myriad non-standard extensions to cover the weaknesses of standard SQL.

Where the average javascript program can be expected to work in any interpreter, and the average c program might need to macro-fy some compiler builtins, the average body of SQL queries will need serious editing to run on a different database and even then can't be expected to produce the same answers.

One of the big selling points for supporting SQL in a new database is that existing tools that emit SQL will be able to run unmodified. But in practice, such tools almost always end up maintaining separate backends for every dialect, so unless you match an existing database bug-for-bug you'll still have to add a new backend to every tool.

Similarly, users will be able to carry across some SQL knowledge, but will be regularly surprised by inconsistencies in syntax, semantics and the set of available types and functions. And unlike the programming language world they won't be able to carry across any existing code or libraries.

This means that the network effects of SQL are much weaker than they are for programming languages, which makes it all the more surprising that we have a bounty of programming languages but only one relational database language.

The application layer

The original idea of relational databases was that they would be queried directly from the client. With the rise of the web this idea died - SQL is too complex to be easily secured against adversarial input, cache invalidation for SQL queries is too hard, and there is no way to easily spawn background tasks (eg resizing images) or to communicate with the rest of the world (eg sending email). And the SQL language itself was not an appealing target for adding these capabilities.

So instead we added the 'application layer' - a process written in a reasonable programming language that would live between the database and the client and manage their communication. And we invented ORM to patch over the weaknesses of SQL, especially the lack of compressibility.

This move was necessary, but costly.

ORMs are prone to n+1 query bugs and feral concurrency. To rephrase, they are bad at efficiently querying data and bad at making use of transactions - two of the core features of relational databases.

As for the application layer: Converting queries into rest endpoints by hand is a lot of error-prone boilerplate work. Managing cache invalidation by hand leads to a steady supply of bugs. If endpoints are too fine-grained then clients have to make multiple roundtrip calls, but if they're too coarse then clients waste bandwidth on data they didn't need. And there is no hope of automatically notifying clients when the result of their query has changed.

The success of GraphQL shows that these pains are real and that people really do want to issue rich queries directly from the client. Compared to SQL, GraphQL is substantially easier to implement, easier to cache, has a much smaller attack surface, has various mechanisms for compressing common patterns, makes it easy to follow foreign keys and return nested results, has first-class mechanisms for interacting with foreign code and with the outside world, has a rich type system (with union types!), and is easy to embed in other languages.

Similarly for firebase (before it was acqui-smothered by google). It dropped the entire application layer and offered streaming updates to client-side queries, built-in access control, client-side caching etc. Despite offering very little in the way of runtime innovation compared to existing databases, it was able to succesfully compete by recognizing that the current division of database + sql + orm + application-layer is a historical accident and can be dramatically simplified.

The overall vibe of the NoSQL years was "relations bad, objects good". I fear that what many researchers and developers are taking away from the success of GraphQL and co is but a minor update - "relations bad, ~objects~ graphs good".

This is a mistake. GraphQL is still more or less a relational model, as evidenced by the fact that it's typically backed by wrappers like hasura that allow taking advantage of the mature runtimes of relational databases. The key to the success of GraphQL was not doing away with relations, but recognizing and fixing the real flaws in SQL that were hobbling relational databases, as well as unbundling the query language from a single monolithic storage and execution engine.

After SQL?

To summarize:

- Design flaws in the SQL language resulted in a language with no library ecosystem and a burdensome spec which limits innovation.

- Additional design flaws in SQL database interfaces resulted in moving as much logic as possible to the application layer and limiting the use of the most valuable features of the database.

- It's probably too late to fix either of these.

But the idea of modelling data with a declarative disorderly language is still valuable. Maybe more so than ever, given the trends in hardware. What should a new language learn from SQL's successes and mistakes?

We can get pretty far by just negating every mistake listed in this post, while ensuring we retain the ability to produce and optimize query plans:

- Start with the structure that all modern languages have converged towards.

- Everything is an expression.

- Variables and functions have compact syntax.

- Few keywords - most things are stdlib functions rather than builtin syntax.

- Have an explicit type system rather than totally disjoint syntax for scalar expressions vs table expressions.

- Ensure that it's always possible to replace a given expression with another expression that has the same type and value.

- Define a (non-implementation specific) system for distributing and loading (and unloading!) libraries.

- Keep the spec simple and complete.

- Simple denotational semantics for the core language.

- Completely specify type inference, error semantics etc.

- Should be possible for an experienced engineer to throw together a slow but correct interpreter in a week or two.

- Encode semantics in a model checker or theorem prover to eg test optimizations. Ship this with the spec.

- Lean on wasm as an extension language - avoids having to spec arithemetic, strings etc if they can be defined as a library over some bits type.

- Make it compressible.

- Allow functions to take relations and other functions are arguments (can be erased by specialization before planning, ala rust or julia).

- Allow functions to operate on relations polymorphically (ie without having to fix the columns and types when writing the function).

- Make column names, orderings, collations, window specifications etc first-class values rather than just syntax (can use staging ala zig's comptime if these need to be constant at planning time).

- Compact syntax for simple joins (eg snowflake schemas, graph traversal).

- True recursion / fixpoints (allows expressing iterative algorithms like parsing).

- Make it porous.

- Allow defining new types, functions, indexes, plan operators etc via wasm plugins (with the calling convention etc in the spec).

- Expose plans, hints etc via api (not via strings).

- Spec both a human-friendly encoding and a tooling-friendly encoding (probably text vs binary like wasm). Ship an embedabble library that does parsing and type inference.

- Make returning nested structures (eg json) ergonomic, or at least allow returning multiple relations.

- Create a subset of the language that can be easily verified to run in reasonable time (eg no table scans, no nested loops).

- Allow exposing subset to clients via graphql-like authorization rules.

- Better layering.

- Separate as much as possible out into embeddable libraries (ala pg_query).

- Expose storage, transaction, execution as apis. The database server just receives and executes wasm against these apis.

- Distribute query parser/planner/compiler as a library so clients can choose to use modified versions to produce wasm for the server.

- Strategies for actually getting people to use the thing are much harder.

Tackling the entire stack at once seems challenging. Rethinkdb died. Datomic is alive but the company was acquihired. Neo4j, on the other hand, seems to be catnip for investors, so who knows.

A safer approach is to first piggy-back on existing databases runtime. EdgeDB uses the postgres runtime. Logica compiles to SQL. GraphQL has compilers for many different query languages.

Another option is to find an untapped niche and work outward from there. I haven't seen this done yet, but there are a lot of relational-ish query niches. Pandas targets data cleaning/analysis. Datascript is a front-end database. Bloom targets distributed systems algorithms. Semmle targets code analysis. Other potential niches include embedded databases in applications (ala fossils use of sqlite), incremental functions from state to UI, querying as an interface to the state of complex programs etc.

In a niche with less competition you could first grow the language and then try to expand the runtime outwards to cover more potential usecases, similar to how sqlite started as a tcl extension and ended up becoming the defacto standard for application-embedded databases, a common choice for data publishing format, and a backend for a variety of data-processing tools.

Questions? Comments? Just want to chat? jamie@scattered-thoughts.net

My work is currently funded by sharing thoughts and work in progress with people who sponsor me on github.

https://blog.healthchecks.io/2018/10/investigating-gmails-this-message-seems-dangerous/

Investigating Gmail’s “This message seems dangerous”

By Pēteris Caune / October 26, 2018

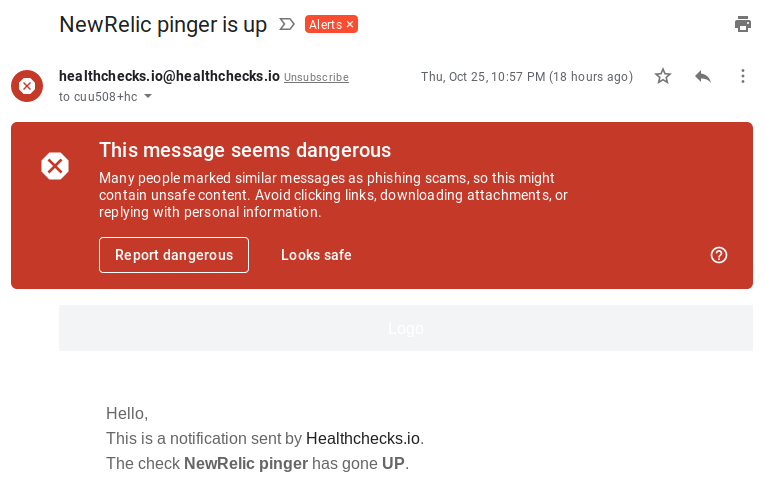

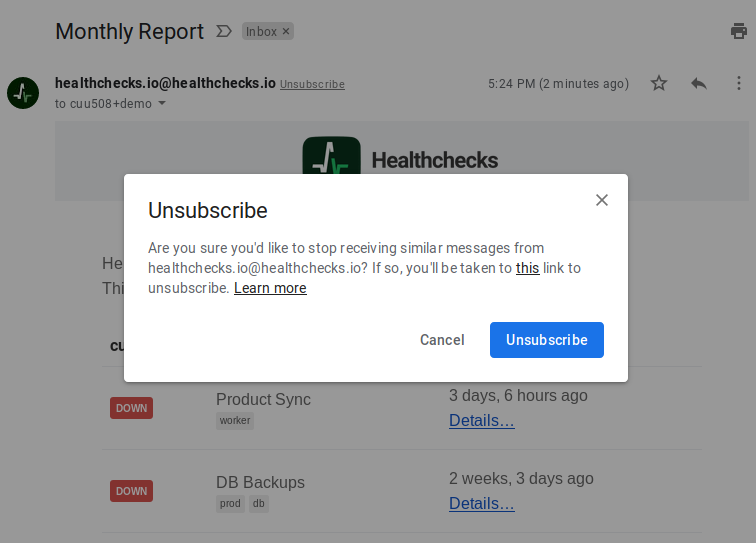

I’ve been receiving multiple user reports that Gmail shows a red “This message seems dangerous” banner above some of the emails sent by Healthchecks.io. I’ve even seen some myself:

The banner goes away after pressing “Looks safe”. And then, some time and some emails later, it is back.